Chandra

An OCR model for complex documents — handwriting, tables, math equations, and messy forms.

Benchmarks

Overall scores on olmOCR-Bench:

Hosted API

A hosted API with additional accuracy improvements is available at datalab.to. Try the free playground without installing anything.

Community

Join our Discord server to discuss development and get help.

Quick Start

```bash
pip install chandra-ocr

# Start vLLM server, then run OCR
chandra_vllm
chandra input.pdf ./output

# Or use HuggingFace locally
chandra input.pdf ./output --method hf

# Interactive web app
chandra_app
```

Python:

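```python
from chandra.model import InferenceManager
from chandra.input import load_pdf_images

# Run the model locally with HuggingFace Transformers
manager = InferenceManager(method="hf")

# Load the PDF pages as images, then run OCR on all of them
images = load_pdf_images("document.pdf")
results = manager.generate(images)

# Markdown for the first page
print(results[0].markdown)
```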

How It Works

- Two inference modes: Run locally via HuggingFace Transformers, or deploy a vLLM server for production throughput

- Layout-aware output: Every text block, table, and image comes with bounding box coordinates

- Structured formats: Output as Markdown, HTML, or JSON with full layout metadata

- 40+ languages supported
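The layout metadata format is not spelled out here, so the following is only a sketch of how per-block bounding boxes can be consumed. It assumes a hypothetical JSON array of blocks with `type`, `bbox` (x0, y0, x1, y1 with a top-left origin) and `content` keys; `LayoutBlock`, `load_blocks` and `blocks_of_kind` are illustrative names, not part of the chandra package.

```python
import json
from dataclasses import dataclass

@dataclass
class LayoutBlock:
    # Hypothetical schema: check a real *_metadata.json before relying on these keys.
    kind: str                                  # e.g. "Text", "Table", "Image"
    bbox: tuple[float, float, float, float]    # (x0, y0, x1, y1), assumed top-left origin
    content: str

def load_blocks(raw_json: str) -> list[LayoutBlock]:
    """Parse a JSON array of {"type", "bbox", "content"} objects."""
    return [
        LayoutBlock(item["type"], tuple(item["bbox"]), item.get("content", ""))
        for item in json.loads(raw_json)
    ]

def blocks_of_kind(blocks: list[LayoutBlock], kind: str) -> list[LayoutBlock]:
    """Keep one kind of block, sorted top-to-bottom, then left-to-right."""
    return sorted(
        (b for b in blocks if b.kind == kind),
        key=lambda b: (b.bbox[1], b.bbox[0]),
    )

raw = json.dumps([
    {"type": "Text", "bbox": [50, 40, 550, 80], "content": "Quarterly report"},
    {"type": "Table", "bbox": [50, 400, 550, 600], "content": "<table>B</table>"},
    {"type": "Table", "bbox": [50, 120, 550, 300], "content": "<table>A</table>"},
])
tables = blocks_of_kind(load_blocks(raw), "Table")
print([t.content for t in tables])  # ['<table>A</table>', '<table>B</table>']
```

Sorting by the top edge and then the left edge is a simple reading-order heuristic; it works for single-column pages but will interleave columns on multi-column layouts.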

What It Handles

Handwriting — Doctor notes, filled forms, homework. Chandra reads cursive and messy print that trips up traditional OCR.

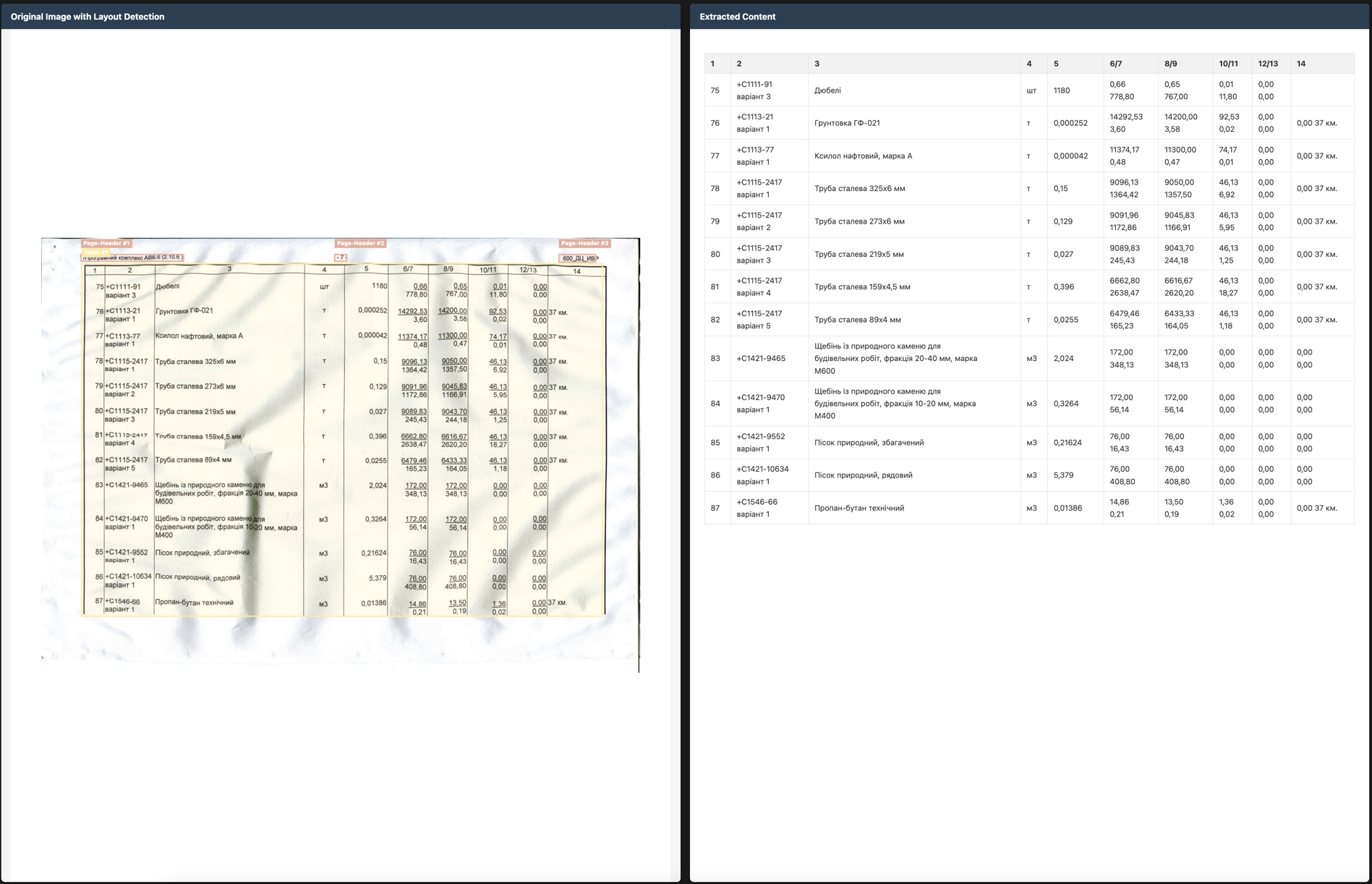

Tables — Preserves structure including merged cells (colspan/rowspan). Works on financial filings, invoices, and data tables.
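Downstream code often needs merged cells expanded into a plain grid, for example before exporting to CSV. A minimal standard-library sketch, assuming a single well-formed table built from `tr`, `td` and `th` elements; `TableGridParser` and `html_table_to_grid` are hypothetical helpers, not chandra APIs.

```python
from html.parser import HTMLParser

class TableGridParser(HTMLParser):
    """Expand one HTML table into a rectangular grid, repeating merged cells."""

    def __init__(self):
        super().__init__()
        self.rows: list[list[str]] = []
        self.carried: dict[tuple[int, int], str] = {}  # (row, col) -> text from a rowspan above
        self.cell = None  # [text, colspan, rowspan] while inside a <td>/<th>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th"):
            if not self.rows:  # tolerate a cell before any <tr>
                self.rows.append([])
            self.cell = ["", int(attrs.get("colspan") or 1), int(attrs.get("rowspan") or 1)]

    def handle_data(self, data):
        if self.cell is not None:
            self.cell[0] += data

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self.cell is not None:
            text, colspan, rowspan = self.cell
            text = text.strip()
            self.cell = None
            r = len(self.rows) - 1
            self._fill_carried(r)
            for _ in range(colspan):
                col = len(self.rows[r])
                self.rows[r].append(text)
                # Reserve this column in the rows that the rowspan covers
                for dr in range(1, rowspan):
                    self.carried[(r + dr, col)] = text
        elif tag == "tr" and self.rows:
            self._fill_carried(len(self.rows) - 1)

    def _fill_carried(self, r):
        # Insert cells pushed into this position by a rowspan from an earlier row
        row = self.rows[r]
        while (r, len(row)) in self.carried:
            row.append(self.carried.pop((r, len(row))))

def html_table_to_grid(html: str) -> list[list[str]]:
    parser = TableGridParser()
    parser.feed(html)
    parser.close()
    return parser.rows

html = """
<table>
  <tr><th>Region</th><th colspan="2">Revenue</th></tr>
  <tr><td rowspan="2">West</td><td>Q1</td><td>10</td></tr>
  <tr><td>Q2</td><td>12</td></tr>
</table>
"""
for row in html_table_to_grid(html):
    print(row)
```

Each merged cell is repeated in every grid position it covers, which keeps all rows the same length; nested tables are not handled.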

Math — Inline and block equations rendered as LaTeX. Handles textbooks, worksheets, and research papers.
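If equations come through as dollar-delimited LaTeX in the Markdown output (an assumption; verify against real output, which may use other delimiters), they can be pulled out for rendering or validation with two regular expressions. `extract_math` is a hypothetical helper.

```python
import re

# Assumed delimiters: $$...$$ for block math and $...$ for inline math.
BLOCK_MATH = re.compile(r"\$\$(.+?)\$\$", re.DOTALL)
INLINE_MATH = re.compile(r"(?<!\$)\$(?!\$)(.+?)(?<!\$)\$(?!\$)")

def extract_math(markdown: str) -> dict[str, list[str]]:
    """Split LaTeX snippets out of Markdown into block and inline lists."""
    block = [m.strip() for m in BLOCK_MATH.findall(markdown)]
    # Remove block math first so its $$ delimiters are not misread as inline math
    remainder = BLOCK_MATH.sub(" ", markdown)
    inline = [m.strip() for m in INLINE_MATH.findall(remainder)]
    return {"block": block, "inline": inline}

sample = "Energy is $E = mc^2$.\n\n$$\n\\int_0^1 x\\,dx = \\frac{1}{2}\n$$\n"
print(extract_math(sample))
```

The inline pattern is deliberately simple and does not cross line breaks; prose containing two literal dollar signs on one line, such as prices, will be misread as math.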

Forms — Reconstructs checkboxes, radio buttons, and form fields with their values.

Complex Layouts — Multi-column documents, newspapers, textbooks with figures and captions.

Examples


More examples

| Type | Name | Link |
|---|---|---|
| Tables | 10-K Filing | View |
| Forms | Lease Agreement | View |
| Handwriting | Math Homework | View |
| Books | Geography Textbook | View |
| Books | Exercise Problems | View |
| Math | Attention Diagram | View |
| Math | Worksheet | View |
| Newspapers | LA Times | View |
| Other | Transcript | View |
| Other | Flowchart | View |

Installation

```bash
pip install chandra-ocr
```

For HuggingFace inference, we recommend installing FlashAttention (the flash-attn package) for better performance.

From source:

```bash
git clone https://github.com/datalab-to/chandra.git
cd chandra
uv sync
source .venv/bin/activate
```

Usage

CLI

```bash
# Single file with vLLM server
chandra input.pdf ./output --method vllm

# Directory with local model
chandra ./documents ./output --method hf
```

Options:

- --method [hf|vllm]: Inference method (default: vllm)
- --page-range TEXT: Page range for PDFs (e.g., "1-5,7,9-12")
- --max-output-tokens INTEGER: Maximum tokens per page
- --max-workers INTEGER: Parallel workers for vLLM
- --include-images/--no-images: Extract and save images (default: include)
- --include-headers-footers/--no-headers-footers: Include page headers/footers (default: exclude)
- --batch-size INTEGER: Pages per batch (default: 1)
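The page-range syntax can be illustrated with a small parser that expands a spec into page numbers and then groups them the way a pages-per-batch setting suggests. This is an illustrative sketch, not chandra's own parser: whether pages are 0- or 1-indexed, and how batches are formed internally, are assumptions. `parse_page_range` and `batches` are hypothetical names.

```python
def parse_page_range(spec: str) -> list[int]:
    """Expand a spec like "1-5,7,9-12" into sorted, unique page numbers.

    Assumption: ranges are inclusive and numbers are used exactly as written.
    """
    pages: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start_s, end_s = part.split("-", 1)
            start, end = int(start_s), int(end_s)
            if start > end:
                raise ValueError(f"descending range: {part!r}")
            pages.update(range(start, end + 1))
        else:
            pages.add(int(part))
    return sorted(pages)

def batches(pages: list[int], batch_size: int = 1) -> list[list[int]]:
    """Group pages into consecutive chunks of at most batch_size pages."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    return [pages[i:i + batch_size] for i in range(0, len(pages), batch_size)]

pages = parse_page_range("1-5,7,9-12")
print(pages)              # [1, 2, 3, 4, 5, 7, 9, 10, 11, 12]
print(batches(pages, 4))  # [[1, 2, 3, 4], [5, 7, 9, 10], [11, 12]]
```

Using a set removes duplicates from overlapping ranges such as "1-5,3-7", so each page is processed once.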

Output structure:

```
output/
└── filename/
    ├── filename.md              # Markdown
    ├── filename.html            # HTML with bounding boxes
    ├── filename_metadata.json
    └── images/                  # Extracted images
```
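To post-process a run, the output directory can be walked with pathlib. This sketch assumes exactly the naming shown in the tree (filename.md, filename.html, filename_metadata.json and an images/ folder per document); `classify` and `collect_outputs` are hypothetical helpers.

```python
from pathlib import Path, PurePosixPath

# Suffixes appended to the document name, per the output tree (assumed exact)
OUTPUT_KINDS = {".md": "markdown", ".html": "html", "_metadata.json": "metadata"}

def classify(rel_path: str):
    """Return (document_name, kind) for a path relative to the output dir, or None."""
    parts = PurePosixPath(rel_path).parts
    if len(parts) < 2:
        return None
    doc, name = parts[0], parts[1]
    if name == "images" and len(parts) > 2:
        return (doc, "image")
    if len(parts) == 2:
        for suffix, kind in OUTPUT_KINDS.items():
            if name == doc + suffix:
                return (doc, kind)
    return None

def collect_outputs(output_dir: str) -> dict[str, dict[str, list[Path]]]:
    """Group every recognized output file by document name and kind."""
    root = Path(output_dir)
    grouped: dict[str, dict[str, list[Path]]] = {}
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        hit = classify(path.relative_to(root).as_posix())
        if hit is not None:
            doc, kind = hit
            grouped.setdefault(doc, {}).setdefault(kind, []).append(path)
    return grouped
```

For example, `collect_outputs("./output")["filename"]["markdown"]` would hold the path to filename.md; unrecognized files are skipped rather than raising.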

vLLM Server

For production or batch processing:

```bash
chandra_vllm
```

Launches a Docker container running the vLLM server with optimized inference settings. Configure it via these environment variables:

- VLLM_API_BASE: Server URL (default: http://localhost:8000/v1)
- VLLM_MODEL_NAME: Model name (default: chandra)
- VLLM_GPUS: GPU device IDs (default: 0)
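A client script can resolve the same variables with the documented defaults before sending work to the server. The sketch below assumes GPU IDs are comma-separated and uses the /models route that vLLM's OpenAI-compatible server exposes under its API base; `vllm_settings` and `server_is_up` are illustrative names, not chandra functions.

```python
import os
import urllib.request

# Defaults as documented for the vLLM server configuration
VLLM_DEFAULTS = {
    "VLLM_API_BASE": "http://localhost:8000/v1",
    "VLLM_MODEL_NAME": "chandra",
    "VLLM_GPUS": "0",
}

def vllm_settings(env=None) -> dict:
    """Resolve vLLM settings from an environment mapping, falling back to defaults."""
    env = os.environ if env is None else env
    cfg = {key: env.get(key, default) for key, default in VLLM_DEFAULTS.items()}
    # Assumption: multiple GPU IDs are comma-separated, e.g. "0,1"
    cfg["VLLM_GPUS"] = [int(g) for g in cfg["VLLM_GPUS"].split(",") if g.strip()]
    # vLLM's OpenAI-compatible server lists served models at <api base>/models
    cfg["models_url"] = cfg["VLLM_API_BASE"].rstrip("/") + "/models"
    return cfg

def server_is_up(models_url: str, timeout: float = 2.0) -> bool:
    """Return True if the models endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(models_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError):  # connection errors, HTTP errors, malformed URLs
        return False
```

Passing an explicit mapping to `vllm_settings` keeps it easy to test; calling it with no argument reads the real process environment.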

Configuration

Settings can be provided via environment variables or a local.env file:

```
MODEL_CHECKPOINT=datalab-to/chandra
MAX_OUTPUT_TOKENS=8192
VLLM_API_BASE=http://localhost:8000/v1
VLLM_GPUS=0
```
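The settings file uses plain KEY=VALUE lines. A minimal reader is sketched below; the precedence rule (a documented key set in the real environment overrides local.env) is an assumption, as are the helper names `parse_env_file`, `merge_settings` and `load_settings`.

```python
import os
from pathlib import Path

# Keys shown in the Configuration example above
KNOWN_KEYS = ("MODEL_CHECKPOINT", "MAX_OUTPUT_TOKENS", "VLLM_API_BASE", "VLLM_GPUS")

def parse_env_file(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines, skipping blanks and # comments; strips surrounding quotes."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split once so URLs with '=' survive
        values[key.strip()] = value.strip().strip("\"'")
    return values

def merge_settings(file_values: dict[str, str], env) -> dict[str, str]:
    """Assumed precedence: known keys set in the environment override local.env."""
    merged = dict(file_values)
    merged.update({key: env[key] for key in KNOWN_KEYS if key in env})
    return merged

def load_settings(path: str = "local.env") -> dict[str, str]:
    env_file = Path(path)
    text = env_file.read_text(encoding="utf-8") if env_file.is_file() else ""
    return merge_settings(parse_env_file(text), os.environ)
```

Values stay strings here; a caller would convert MAX_OUTPUT_TOKENS with `int()` before use.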

Commercial Usage

The code is licensed under Apache 2.0. Model weights use a modified OpenRAIL-M license: they are free for research, personal use, and startups with under $2M in funding/revenue, but may not be used to compete with our API. For broader commercial licensing, see pricing.